******************************************************************************

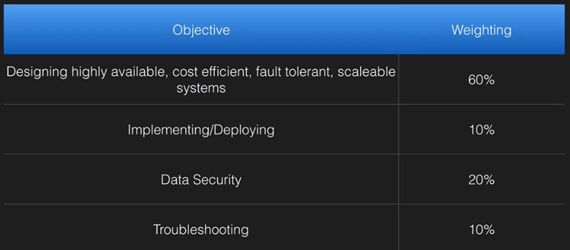

* Description: Knowledge of AWS solution architect associate certificate exam

* Date: 05:35 PM EST, 09/02/2017

******************************************************************************

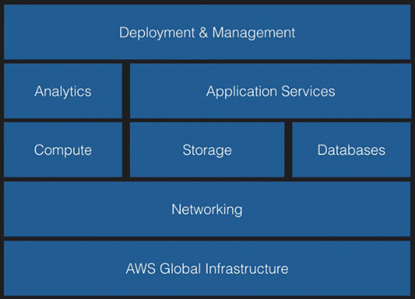

<1> AWS services covered within the exam:

|

|__ 1) Messaging:

| |

| |__ o. SNS: simple notification service

|

|__ 2) Desktop and App streaming:

| |

| |__ o. Workspace:

|

|__ 3) Security & identity:

| |

| |__ o. IAM:

| |

| |__ o. Inspector: An agent installed on virtual machines that produces security reports [not much of this in the exam].

| |

| |__ o. Certificate Manager: SSL, domain name.

| |

| |__ o. Directory Service: Active directory to AWS [import].

| |

| |__ o. WAF - Web Application Firewall. Protects websites at the network/application level, for example against SQL injection.

| |

| |__ o. Artifact - on-demand access to AWS compliance documents from the console.

|

|

|__ 4) Management tools:

| |

| |__ o. CloudWatch: Monitoring performance. EC2 disk/CPU utilization.

| |

| |__ o. CloudFormation: Turns your IT infrastructure into code. A template describes the components you would otherwise build by hand, such as firewalls, network switches, and machines.

| | A single command creates the whole IT environment.

| |

| |__ o. CloudTrail: Audits activities and changes within the IT environment.

| |

| |__ o. Config: Tracks resource configuration and warns automatically about changes.

| |

| |__ o. OpsWorks: Automating deployment using chef.

| |

| |__ o. Service Catalog: For larger enterprises; lists the specific servers the company has authorized for use. [Not exam topic].

| |

| |__ o. Trusted Advisor: Automated checks of your environment's health, such as disk fault tolerance.

| |

| |__ o. Managed Services:

|

|

|__ 5) Storage:

| |

| |__ o. S3: Object based.

| |

| |__ o. Glacier: Low-cost storage for infrequently accessed files; retrieval is slow.

| |

| |__ o. EFS: File based (a shared network file system).

| |

| |__ o. Storage Gateway: A virtual server that you set up from the AWS console to connect your data center to the cloud.

| It provides seamless and secure integration between an organization's on-premises IT environment and AWS's storage infrastructure.

|

|

|__ 6) Database:

| |

| |__ o. RDS: relational database service.

| |

| |__ o. DynamoDB: Non-relational (NoSQL) database with very high performance. Covered more in the developer exam.

| |

| |__ o. Redshift: data warehouse service - big data.

| |

| |__ o. ElastiCache: caching data in the cloud.

|

|

|

|__ 7) Networking & content Delivery:

| |

| |__ o. VPC: Virtual Private Cloud. A virtual data center.

| |

| |__ o. Route53: AWS DNS service. User can register Domain Name here. Port 53 is for DNS service.

| |

| |__ o. Direct Connect: A dedicated network line from your physical data center to the cloud, for security and a reliable connection.

| |

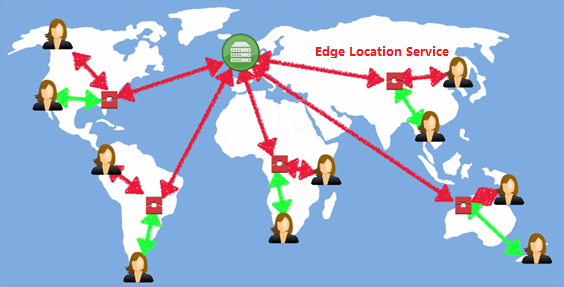

| |__ o. CloudFront: A distribution allows you to distribute content using a worldwide network of edge locations that provide low latency and high data transfer speeds.

|

|

|__ 8) Compute:

| |

| |__ o. EC2:

| |

| |__ o. EC2 Container:

| |

| |__ o. Elastic Beanstalk: More for developers than for solution architects. Generally, it analyzes the developer's code and designs the infrastructure for it.

| |

| |__ o. Lambda: Serverless - you upload your code and it runs automatically. Not in exam.

| |

| |__ o. Lightsail: For publishing WordPress blogs. Not in exam.

|

|

|__ 9) AWS global infrastructure:

| |

| |__ o. Regions: a geographical area consisting of 2 or more availability zones.

| |

| |__ o. Availability zones: simply a data center within a region. AZs in the same region are close together to keep latency low.

| |

| |__ o. Edge location: content delivery network endpoints for CloudFront. Basically, they cache large media files, such as videos.

| If the video is stored in NY but requested from China, the edge location caches it near you. There are more edge locations than regions.

|

|__ 10) Migration:

| |

| |__ o. Snowball: export/import - bundle disk transfer to cloud S3, EBS virtual disk. IDA. Enterprise level.

| |

| |__ o. DMS: Database Migration Service migrates your primary database to the cloud.

| | For example, migrate an Oracle database from your physical data center to AWS RDS/Aurora to get rid of the Oracle licensing fee. Replication with no downtime.

| |

| |__ o. SMS: Server migration service - move virtual machine.

|

|

|__ 11) Analytics Service:

|

|__ o. Athena: For an S3 bucket holding lots of .csv/.json files.

| This service lets you run SQL queries against the data in those files, turning flat text files into a searchable database.

|

|__ o. EMR: big data processing (high level, and how to access is enough).

|

|__ o. Cloud search: search engine for your website.

|

|__ o. Elasticsearch Service:

|

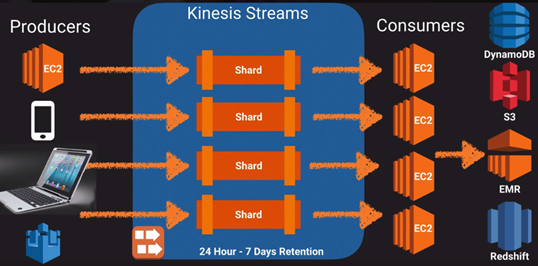

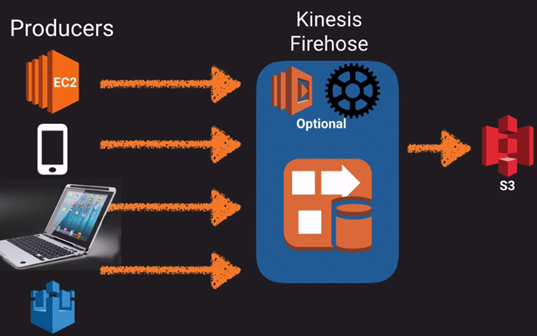

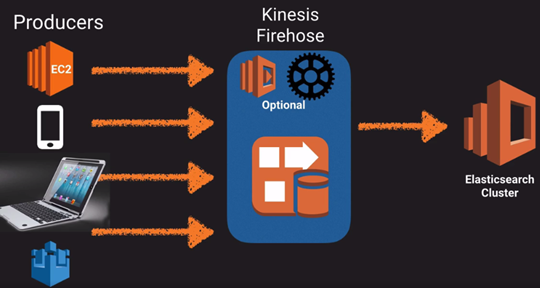

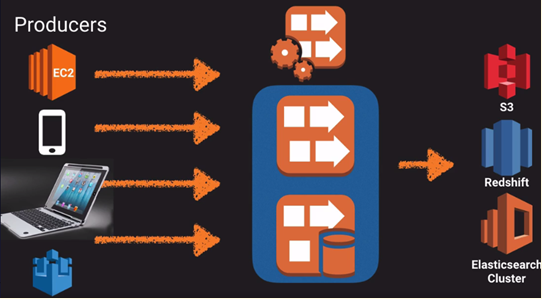

|__ o. Kinesis: Streaming real time data. Process TB data per hour.

|

|__ o. Data Pipeline: move S3 to DynamoDB. Not too much for associate.

|

|__ o. QuickSight: Business analysis tool. Create dashboards for your data.

<2> Massive Concept:

1) A region is a geographical area. Each region consists of 2 or more availability zones, and an AZ is simply a data center. AZs in the same region are close together to keep latency low.

2) Edge Location: content delivery network endpoints for CloudFront. Basically, they cache large media files, such as videos.

There are more edge locations than regions. Suppose the video you want is stored on a server in NY, but the request comes from China.

Once one user in China has requested the video, the edge location in China caches it for the next user.

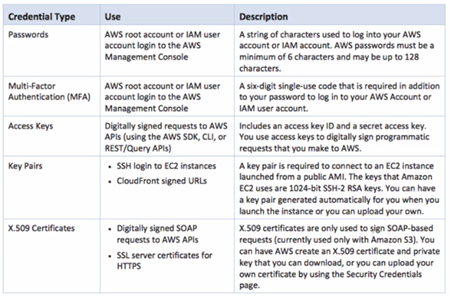

<3> Identity and Access Management [IAM] - allows you to manage users and their level of access to the AWS console:

o. IAM is global/universal. It does not apply to any particular region.

o. Customize AWS console URL on webpage.

o. Root user account is the one you created with Email.

o. New user has no permission.

o. Access Key ID & Secret Access Key are only for the API, not for console login.

o. Access Key ID & Secret Access Key are shown only once, when generated. If you lose them, you must create new ones.

o. Always set up Multi-Factor Authentication on your root account. [ Google Authenticator ]

o. Admins can customize the password policy, for example length, upper/lower case, numerics.

o. Power user - can access any AWS service except the management of groups and users within IAM.

o. centralised control of your AWS account.

o. shared access to your AWS account.

o. Granular permission.

o. identity federation (including active directory, facebook, linkedin etc).

o. multifactor authentication.

o. provide temporary access for users/devices and services where necessary.

o. allows you to set up your own password rotation policy.

o. integrates with many different AWS services.

o. support PCI DSS Compliance.

o. users.

o. groups - a collection of users under one set of permissions.

o. roles - you create roles and can assign them to AWS resources.

o. policies - a document that defines one or more permissions, similar to an Oracle user profile.

o. region - only global.

o. console DNS - customize.

o. activate multi-factor authentication (MFA) on your root account - the root account is the email that you signed up with.

o. Enable MFA - Google Authenticator.

o. IAM user console URL - https://91932334972.signin.aws.amazon.com/console
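
The "policies" bullet above can be made concrete with a small example. This is a minimal sketch that only builds the JSON document and never calls AWS; the bucket name `example-bucket` and the choice of actions are assumptions made for illustration.

```python
import json

# Hypothetical policy: read-only access to one bucket (the bucket name is made up).
read_only_policy = {
    "Version": "2012-10-17",  # version of the policy language, not a date you pick
    "Statement": [
        {
            "Effect": "Allow",
            "Action": ["s3:GetObject", "s3:ListBucket"],
            "Resource": [
                "arn:aws:s3:::example-bucket",    # the bucket itself (for ListBucket)
                "arn:aws:s3:::example-bucket/*",  # every object in it (for GetObject)
            ],
        }
    ],
}

# A policy is attached to a user, group, or role as a JSON string.
policy_json = json.dumps(read_only_policy, indent=2)
print(policy_json)
```

A new user has no permissions until a document like this is attached, directly or through a group.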

<4> Storage - Simple Storage Service[S3] - provides secure, durable, highly-scalable object storage:

o. Object-based storage only, such as video, flat files, PDF, Word documents, images.

o. The data is spread across multiple devices and facilities, and it is cheap.

o. Files can be from 0 bytes to 5 TB.

o. Storage is unlimited - AWS monitors usage across regions globally.

o. Files are stored in buckets. A bucket is just a folder with a unique name. [Name should be all lower case]

o. S3 is a universal namespace, that is, names must be unique globally.

o. When you upload a file to S3, you will receive an HTTP 200 code if the upload was successful.

o. Data Consistency Model for S3 [Critical]

=> Read-after-write consistency for PUTs of new objects.

=> Eventual consistency for overwrite PUTs and DELETEs (can take some time to propagate).

=> There is no fee for copying data from EC2 to S3 if they are in the same region.

=> Basically, when you upload a new object such as a PDF file, you should be able to read it immediately.

However, when you update or delete the file, the change is not visible that fast. It may take some time [milliseconds] to propagate,

because the object is stored across multiple devices. A file may be read while it is being updated.

The read returns either the new version or the old version of the data. You will never read partial or corrupted data.
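
The consistency rules above can be illustrated with a toy model. This is a sketch for intuition only, not how S3 is implemented: the class name, the two-replica layout, and the explicit `sync()` step are all assumptions made for the example.

```python
class EventuallyConsistentStore:
    """Toy model: replica 0 takes writes; replica 1 sees overwrites only after sync()."""

    def __init__(self):
        self.replicas = [{}, {}]
        self.pending = []  # overwrites not yet propagated to replica 1

    def put(self, key, value):
        is_new = key not in self.replicas[0]
        self.replicas[0][key] = value
        if is_new:
            # New object: visible everywhere at once (read-after-write).
            self.replicas[1][key] = value
        else:
            # Overwrite: replica 1 keeps the old version for a while.
            self.pending.append((key, value))

    def get(self, key, replica):
        # A read returns a whole old or whole new value, never a mix.
        return self.replicas[replica][key]

    def sync(self):
        for key, value in self.pending:
            self.replicas[1][key] = value
        self.pending.clear()


store = EventuallyConsistentStore()
store.put("report.pdf", b"version 1")   # new object
first_read = store.get("report.pdf", replica=1)
store.put("report.pdf", b"version 2")   # overwrite
stale_read = store.get("report.pdf", replica=1)
store.sync()                             # propagation finishes
fresh_read = store.get("report.pdf", replica=1)
```

Here `first_read` already sees the new object, `stale_read` still returns the old version, and `fresh_read` returns the new one once propagation has finished.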

o. S3 is highly available and fault tolerant. No need to worry if an AZ goes down.

o. URL of S3 bucket .................................. https://s3-eu-west-1.amazonaws.com/emeralit

o. URL of S3 bucket hosting a static website ......... http://S3_Bucket_Name.s3-website-us-east-1.amazonaws.com

o. To use Route53 with a website hosted in S3, the bucket name must be the same as the domain name.

o. The minimum file size that you can upload to S3 is 0 bytes.

o. Versioning must be enabled for cross-region replication.

o. key - the name of the object

o. value - the data is made of a sequence of bytes.

o. version id - important for versioning

o. metadata - data about the data you are storing, such as last uploaded/modified time

o. versioning

o. metadata

o. subresources - access control list(object permission), torrent

o. Built for 99.99% availability for the S3 platform

o. Amazon guarantees 99.9% availability

o. Amazon ensures 99.999999999% (11 nines) durability for S3

o. Tiered storage available

o. Lifecycle management - deletion retention

o. versioning

o. encryption

o. Secure your data using access control lists and bucket policies

o. Storage Tiers/Classes

o. S3 - 99.99% availability, 99.999999999% durability, stored redundantly across multiple devices/facilities and designed to sustain the loss of 2 facilities concurrently.

o. S3 - IA(Infrequently accessed) for data that is accessed less frequently, but requires rapid access when needed. Lower fee than S3, but you are charged a retrieval fee.

o. Reduced redundancy storage - design to provide 99.99% durability and availability of objects over a given year.

o. Glacier - very cheap, but used for archival only. It takes 3-5 hours to restore from Glacier.

o. Glacier is an extremely low-cost storage service for data archival. It stores data for as little as $0.01 per GB per month, and is optimized for data

that is infrequently accessed and for which retrieval times of 3 to 5 hours are suitable.
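
To get a feel for what these percentages mean, the arithmetic can be worked through. This is a rough sketch, assuming the commonly quoted eleven nines (99.999999999%) of durability for S3 Standard and a hypothetical collection of 10,000,000 objects; the function names are made up for the example.

```python
from fractions import Fraction

MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600, ignoring leap years


def downtime_minutes_per_year(availability_percent: str) -> Fraction:
    """Minutes per year a service may be unavailable at the given availability."""
    unavailable = 1 - Fraction(availability_percent) / 100
    return unavailable * MINUTES_PER_YEAR


def expected_objects_lost_per_year(object_count: int, durability_percent: str) -> Fraction:
    """Average number of objects lost per year at the given durability."""
    return object_count * (1 - Fraction(durability_percent) / 100)


# Exact fractions avoid floating-point noise when subtracting numbers close to 1.
print(float(downtime_minutes_per_year("99.99")))                          # 52.56
print(float(expected_objects_lost_per_year(10_000_000, "99.999999999")))  # 0.0001
```

So 99.99% availability allows roughly 53 minutes of downtime a year, and at eleven nines you would expect to lose one of ten million objects about once every 10,000 years.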

o. S3 charges - by storage / requests / storage management pricing / data transfer pricing (replication from one region to another) / transfer acceleration.

o. Amazon S3 transfer acceleration enables fast, easy, and secure transfers of files over long distances between your end users and an S3 bucket.

Transfer acceleration takes advantage of Amazon CloudFront's globally distributed edge locations [an edge location is a data site very close to the end user].

As the data arrives at an edge location, data is routed to Amazon S3 over an optimized network path.

o. Create S3 bucket: Go to --> Properties --> Storage Management --> Switch between "New Console" and "Old Console".

o. properties:

|

|__ Versioning: protects against users deleting object files accidentally.

|

|__ Logging [auditing user access to the bucket]: CloudTrail audits access to all services, which can be overwhelming.

|

|__ Static website hosting [HTML only, not for PHP]

| |__ o. Scalable

| |__ o. Cheap

|

|__ Tag

|

|__ Cross region replication

|

|__ Events: e.g. a notification when a user uploads a photo

|

|__ Lifecycle: S3/S3-IA/S3 Reduced Redundancy

|

|__ Permission: Every S3 bucket is private by default when created.

|

|__ Management: Metric with numbers of request, storage usage, report of inventory.

o. Versioning of S3 bucket:

==> Versioning can only be suspended, not removed.

==> If you delete one version of an object, you cannot restore it via the new console.

==> Clicking on the object link points to the latest version --> restore from the old console via the "Delete Marker".

==> When you delete an object, it is marked as "deleted", but you can still restore it via the old console.

Sort of like moving a file to the recycle bin before deleting it permanently.

o. Stores all versions of an object, including all writes, even if you delete the object.

o. Used for backup purposes as well.

o. Once versioning is enabled, it cannot be disabled, only suspended.

o. Integrates with lifecycle rules, such as moving to Glacier after a retention period, or deleting.

o. Versioning has an MFA Delete capability. If you want to delete a file, it will require multi-factor authentication, which improves security.

o. S3 - Cross Region Replication:

==> Versioning must be enabled on both the source and destination buckets.

==> Regions must be unique. You cannot replicate between buckets in the same region.

==> Files in an existing bucket are not replicated automatically. All subsequently updated files will be replicated automatically.

==> You cannot replicate to multiple buckets or use daisy chaining (at this time).

==> Delete markers are replicated.

==> Deleting individual versions or delete markers is not replicated.

==> Understanding what cross-region replication is at a high level is enough.

==> A prefix is just the folder under your bucket.

o. S3/Glacier - Life cycle management:

==> Can be used in conjunction with versioning.

==> Can be applied to current versions and previous versions.

==> Following actions can now be done:

1) Transition to the Standard - Infrequent Access storage class (objects of at least 128 KB, 30 days after the creation date).

2) Archive to the Glacier storage class (30 days after IA, if relevant).

3) Permanently delete.

==> Glacier archives: max size is 40 TB; unlimited number of archives; uploading is synchronous; supports upload/download/delete.

Once an archive is uploaded, it is immutable [unchangeable].

Glacier and AWS Storage Gateway encrypt data natively.
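
The lifecycle actions above can be written down as a rule document. This is a minimal sketch that only builds and checks a dictionary; the layout follows the shape that, to my understanding, boto3's `put_bucket_lifecycle_configuration` accepts, and the helper name, rule ID, and `logs/` prefix are assumptions for illustration.

```python
def build_lifecycle_rule(rule_id: str, prefix: str, ia_after_days: int,
                         glacier_after_days: int, expire_after_days: int) -> dict:
    """Build one lifecycle rule, enforcing the timing limits from the notes."""
    if ia_after_days < 30:
        raise ValueError("Standard-IA transition needs at least 30 days after creation")
    if glacier_after_days < ia_after_days + 30:
        raise ValueError("Glacier transition must come at least 30 days after IA")
    if expire_after_days <= glacier_after_days:
        raise ValueError("expiration must come after the Glacier transition")
    return {
        "ID": rule_id,
        "Filter": {"Prefix": prefix},  # a prefix is just the folder under the bucket
        "Status": "Enabled",
        "Transitions": [
            {"Days": ia_after_days, "StorageClass": "STANDARD_IA"},
            {"Days": glacier_after_days, "StorageClass": "GLACIER"},
        ],
        "Expiration": {"Days": expire_after_days},  # permanent delete
    }


# Hypothetical rule: IA after 30 days, Glacier after 60, delete after a year.
lifecycle_configuration = {
    "Rules": [build_lifecycle_rule("archive-logs", "logs/", 30, 60, 365)]
}
```

Validating the day counts up front keeps an impossible schedule from ever reaching the service.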

o. Securing your buckets:

==> By default, all newly created buckets are PRIVATE.

==> You can set up access control to your bucket using:

<1> Bucket policies.

<2> Access control lists - individual permissions.

==> S3 buckets can be configured to create access logs, which log all requests made to the S3 bucket. The logs can be written to another bucket.

==> Encryption:

<1> In Transit - SSL/TLS [HTTPS]

<2> At rest:

>> Server-side encryption:

1) S3 managed keys - SSE-S3.

2) AWS Key Management Service - SSE-KMS.

3) Server-Side Encryption with Customer-Provided Keys - SSE-C.

>> Client-side encryption: you encrypt the data yourself and upload it to S3.
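
The three server-side options differ mainly in the request headers sent with an upload. This is a hedged sketch of those headers as I understand the S3 REST API; the helper name `sse_headers` is hypothetical, and the all-zero key is a placeholder only, never something to use for real data.

```python
import base64
import hashlib


def sse_headers(mode: str, customer_key: bytes = b"") -> dict:
    """Return the extra PUT headers for one server-side encryption mode."""
    if mode == "SSE-S3":
        return {"x-amz-server-side-encryption": "AES256"}
    if mode == "SSE-KMS":
        return {"x-amz-server-side-encryption": "aws:kms"}
    if mode == "SSE-C":
        if len(customer_key) != 32:
            raise ValueError("SSE-C expects a 256-bit (32-byte) key")
        key_md5 = hashlib.md5(customer_key).digest()  # integrity check of the key
        return {
            "x-amz-server-side-encryption-customer-algorithm": "AES256",
            "x-amz-server-side-encryption-customer-key":
                base64.b64encode(customer_key).decode("ascii"),
            "x-amz-server-side-encryption-customer-key-MD5":
                base64.b64encode(key_md5).decode("ascii"),
        }
    raise ValueError(f"unknown mode: {mode}")


s3_managed = sse_headers("SSE-S3")
customer_provided = sse_headers("SSE-C", bytes(32))  # placeholder key of 32 zero bytes
```

With SSE-C you send the key on every request and S3 does not store it, so losing the key means losing the data.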

<5> Storage - CloudFront - A content delivery network (CDN) is a system of distributed servers (network) that delivers webpages and other web content to a user based on the geographic

locations of the user, the origin of the webpage and a content delivery server.

o. Key Terminology: Edge Location - This is the location where content will be cached.

o. CDN accelerate URL format will be https://bucket_name.s3-accelerate.amazonaws.com

o. This is separate from an AWS Region/AZ.

o. Origin - This is the origin of all the files that the CDN will distribute. This can be an S3 bucket, an EC2 instance, an Elastic Load Balancer, or Route53.

o. Distribution - This is the name given the CDN which consists of a collection of Edge locations.

o. CloudFront can be used to deliver your entire website, including dynamic, static, streaming, and interactive content using a global network of edge locations.

Requests for your content are automatically routed to the nearest edge location, so content is delivered with the best possible performance.

o. Cloudfront is optimized to work with other services, such as S3, EC2, ELB, and Route 53.

Also works seamlessly with any non-AWS origin server, which stores the original, definitive versions of your files.

o. Web distribution - typically used for websites.

o. RTMP - used for media streaming - Adobe Flash.

o. Web distribution/RTMP distribution

o. Edge locations are not just READ only, you can write to them too.

o. Objects are cached for the life of the TTL [Time to Live - how long an object stays cached].

o. You can clear cached objects, but you will be charged. [Default TTL is 24 hours. If you need to remove objects earlier, for example to publish a new version of a video, you will be charged.]

o. CloudFront is not only for downloads; it is also available for uploads.
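
The TTL and invalidation behaviour above can be sketched as a tiny cache. This is a toy model under stated assumptions, not CloudFront's implementation: the `EdgeCache` class, the dictionary standing in for the origin, and the explicit `now` argument are all made up for the example, and the TTL of 24 hours is simply passed in as a parameter.

```python
class EdgeCache:
    """Toy edge location: serves cached objects until their TTL expires."""

    def __init__(self, origin: dict, ttl_seconds: float):
        self.origin = origin          # stand-in for the S3 bucket or web server
        self.ttl_seconds = ttl_seconds
        self.entries = {}             # path -> (value, expiry time)
        self.origin_fetches = 0

    def get(self, path: str, now: float):
        entry = self.entries.get(path)
        if entry is not None and now < entry[1]:
            return entry[0]           # cache hit: the origin is not contacted
        self.origin_fetches += 1      # cache miss or expired: go back to the origin
        value = self.origin[path]
        self.entries[path] = (value, now + self.ttl_seconds)
        return value

    def invalidate(self, path: str):
        """Clear an object before its TTL ends (the step you are charged for)."""
        self.entries.pop(path, None)


origin = {"/video.mp4": "version 1"}
edge = EdgeCache(origin, ttl_seconds=24 * 3600)

first = edge.get("/video.mp4", now=0)        # fetched from the origin
origin["/video.mp4"] = "version 2"           # the origin publishes an update
cached = edge.get("/video.mp4", now=3600)    # still inside the TTL
edge.invalidate("/video.mp4")
updated = edge.get("/video.mp4", now=3601)   # fetched again after invalidation
```

Until the TTL runs out or the object is invalidated, users near this edge keep receiving the old version.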

<6> Storage - Storage Gateway - a service that connects an on-premises software appliance with cloud-based storage to provide seamless and secure integration

between an organization's on-premises IT environment and AWS's storage infrastructure.

The service enables you to securely store data to the AWS cloud for scalable and cost-effective storage.

o. AWS storage gateway's software appliance is available for download as a virtual machine image that you install on a host in your data center.

SG supports either VMware ESXi or Microsoft Hyper-V. Once you have installed your gateway and associated it with your AWS account through the activation process,

you can use the AWS management console to create the storage gateway option that is right for you.

o. Four types of Storage Gateway:

>> File Gateway (NFS) - Flat files - .pdf/video/Word ===> S3 - brand new.

Most recently used data is cached on the gateway for low-latency access, and data transfer between your data center and AWS is fully managed and optimized by the gateway.

>> Volume Gateway (iSCSI) - Block-based storage, OS/virtual hard disk/SQL Server, not flat files

a) Stored mode: primary data is stored locally and your entire dataset is available for low-latency access while asynchronously backed up to AWS. [Google Drive]

b) Cached mode: primary data is written to S3, while retaining your frequently accessed data locally in a cache for low-latency access.

>> Tape Gateway (VTL)

o. File Gateway:

>> Files are stored as objects in your S3 buckets, accessed through a network File system mount point.

>> Ownership, permissions, and timestamps are durably stored in S3 in the user meta-data of the object associated with the file.

>> Once objects are transferred to s3, they can be managed as native s3 objects, and the bucket policies such as versioning, lifecycle management,

and cross-region replication apply directly to objects stored in your bucket.

o. Volume Gateway [Virtual hard disk]:

>> The volume interface presents your applications with disk volumes using the iSCSI block protocol.

>> Data written to these volumes can be asynchronously backed up as point-in-time snapshots of your volumes, and stored in the cloud as Amazon EBS snapshots.

>> Snapshots are incremental backups that capture only changed blocks. All snapshot storage is also compressed to minimize your storage charges.

o. Tape Gateway:

>> It offers a durable, cost-effective solution to archive your data in the AWS cloud.

>> The VTL interface it provides lets you leverage your existing tape-based backup application infrastructure to store data on virtual tape cartridges

that you create on your tape gateway.

>> Each tape gateway is pre-configured with a media changer and tape drives, which are available to your existing client backup applications as iSCSI devices.

>> You add tape cartridges as you need to archive your data.

>> Supported by NetBackup, Backup Exec, Veeam etc.

o. Exam Tips:

>> File Gateway - For flat files, stored directly on S3.

>> Volume Gateway:

a) Stored volumes - entire dataset is stored on site and is asynchronously backed up to S3.

b) Cached volumes - entire dataset is stored on S3 and the most frequently accessed data is cached on site.

>> Gateway Virtual Tape Library (VTL) - Used for backup, and works with popular backup applications like NetBackup, Backup Exec, Veeam etc.

<7> Storage - Snowball - Previously, AWS Import/Export Disk accelerated moving large amounts of data into and out of the AWS cloud using portable storage devices for transport.

AWS Import/Export Disk transferred your data directly onto and off of storage devices using Amazon's high-speed internal network, bypassing the internet.

But the problem was that every client sent in different devices to manage. That is why Snowball was introduced.

Snowball is a physical hardware device produced by AWS, about the size of a suitcase.

o. Snowball type:

a) Standard Snowball.

b) Snowball Edge.

c) Snowmobile.

o. Exam Tips - Snowball:

>> Understand what snowball is

>> Understand what import export is

>> Snowball Can

>> Import to S3

>> Export from S3

<8> EC2:

o. Amazon Elastic Compute Cloud (Amazon EC2) is a web service that provides resizable compute capacity in the cloud.

Amazon EC2 reduces the time required to obtain and boot new server instances to minutes, allowing you to quickly scale capacity both up and down

as your computing requirements change.

o. Amazon EC2 changes the economics of computing by allowing you to pay only for capacity that you actually use.

Amazon EC2 provides developers the tools to build failure resilient applications and isolate themselves from common failure scenarios.

o. EC2 Type by price:

>> On Demand ... Allow you to pay a fixed rate by the hour with no commitment. Suit for startup company.

>> Reserved .... Provide you with a capacity reservation, and offer a significant discount on the hourly charge for an instance. 1 Year or 3 Year Terms.

>> Spot ........ Enable you to bid whatever price you want for instance capacity, providing for even greater savings if your applications have flexible start and end times.

>> Dedicated ... Physical EC2 server dedicated for your use. Dedicated Hosts can help you reduce costs by allowing you to use your existing server-bound software licenses.

o. EC2 - On demand:

>> Users that want the low cost and flexibility of Amazon EC2 without any up-front payment or long-term commitment.

>> Applications with short term, spiky, or unpredictable workloads that cannot be interrupted.

>> Applications being developed or tested on Amazon EC2 for the first time, such as startup company.

o. EC2 - Reserved:

>> For example, reserving 2 servers for the annual Black Friday.

>> Applications with steady state or predictable usage.

>> Applications that require reserved capacity.

>> Users able to make upfront payments to reduce their total computing costs even further.

>> Reserved instances can be migrated across availability zones, are specific to one instance type, and lower the Total Cost of Ownership of a system.

>> Reserved instances are for long-term use with predictable CPU/memory/IO performance.

o. EC2 - Spot:

>> A sudden need for a large amount of urgent computing:

>> The spot price you bid is the price you are willing to pay; AWS sets a floating market price for spare capacity.

If your bid meets or exceeds the floating price, your EC2 instance runs; otherwise it is terminated.

>> Use Case:

a) Applications that have flexible start and end times.

b) Applications that are only feasible at very low compute prices.

c) Users with urgent computing needs for large amounts of additional capacity.

>> If the Spot instance is terminated by Amazon EC2, you will not be charged for that partial hour of usage.

However, if you terminate the instance yourself, you will be charged for any hour in which the instance ran.
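
The bidding and partial-hour rules above can be expressed in a few lines. This is a sketch of the hourly billing model as these notes describe it, with hypothetical function names and prices; it is not a statement of current AWS pricing.

```python
import math


def spot_instance_runs(bid_price: float, spot_price: float) -> bool:
    """The instance runs only while the bid meets or exceeds the floating price."""
    return bid_price >= spot_price


def billable_hours(minutes_run: int, terminated_by_aws: bool) -> int:
    """Hours charged for a Spot instance under the partial-hour rule."""
    if terminated_by_aws:
        # AWS ended the instance: the unfinished hour is free.
        return minutes_run // 60
    # You ended the instance: every hour it ran in is charged, even partially used.
    return math.ceil(minutes_run / 60)


running = spot_instance_runs(bid_price=0.05, spot_price=0.04)
charged_if_aws_stops = billable_hours(150, terminated_by_aws=True)
charged_if_you_stop = billable_hours(150, terminated_by_aws=False)
```

For a run of 2.5 hours, an interruption by AWS is billed as 2 hours, while stopping it yourself is billed as 3.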

o. EC2 - Dedicated:

>> For example, government purposes.

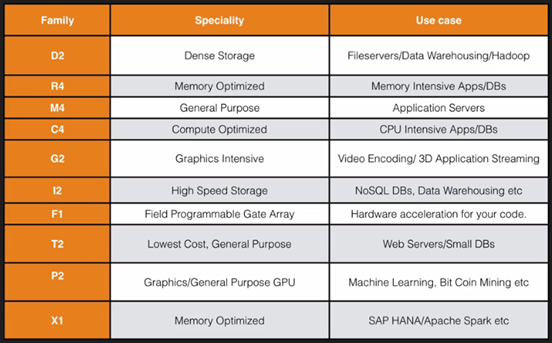

o. EC2 Type - DR MC GIFT PX:

>> D - Density

>> R - RAM

>> M - main choice for general purpose apps

>> C - Compute

>> G - Graphics

>> I - IOPS

>> F - FPGA

>> T - cheap general purpose (think T2 Micro)

>> P - Graphics (think Pics)

>> X - Extreme Memory
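
The mnemonic above maps naturally onto a lookup table. This is a small sketch, assuming instance type names of the form `t2.micro` where the first letter is the family; the dictionary values paraphrase the list above and the function name is made up for the example.

```python
# Family letter -> what it is optimized for, following the DR MC GIFT PX list.
INSTANCE_FAMILIES = {
    "d": "density (dense storage)",
    "r": "RAM",
    "m": "main choice for general purpose apps",
    "c": "compute",
    "g": "graphics",
    "i": "IOPS",
    "f": "FPGA",
    "t": "cheap general purpose",
    "p": "graphics (think pics)",
    "x": "extreme memory",
}


def describe_instance_type(instance_type: str) -> str:
    """Look up the family of a type name such as 't2.micro' by its first letter."""
    family = instance_type[:1].lower()
    if family not in INSTANCE_FAMILIES:
        raise KeyError(f"unknown instance family in {instance_type!r}")
    return INSTANCE_FAMILIES[family]


print(describe_instance_type("t2.micro"))   # cheap general purpose
print(describe_instance_type("c4.large"))   # compute
```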

<3> Identity Access Management[IAM] - allow you to manage users and their level of access to the AWS console:

o. IAM is global/universal. It does not apply any particular region.

o. Customize AWS console URL on webpage.

o. Root user account is the one you created with Email.

o. New user has no permission.

o. Access KEY ID & Seceret Access Key are only for API, not for console login.

o. Access KEY ID & Seceret Access Key are one-time generated. New one must be recreated.

o. Always setup Multi-Factor Authentication on your root account. [ Google authenticator ]

o. Admin can customize your own password policy. Exa, length, up/lower case, numeric.

o. Power user - can access to any AWS services except the management of groups and users within IAM.

o. centralised control of your AWS account.

o. shared access to your AWS account.

o. Granular permission.

o. identity federation (including active directory, facebook, linkedin etc).

o. multifactor authentication.

o. provide temporary access for users/devices and services where necessary.

o. allows you to set up your own password rotation policy.

o. integrates with many different AWS services.

o. support PCI DSS Compliance.

o. users.

o. groups - a collection of users under one set of permissions.

o. roles - you create roles and can assign them to AWS resources.

o. policies - a document that defines one or more permission. Such as Oracle user profile.

o. region - only global.

o. console DNS - customize.

o. activate (multiple factor authertication)MFA on your root account - root account is the email that you sign up/in.

o. Enable MFA - Google authanticator.

o. IAM user console URL - https://91932334972.signin.aws.amazon.com/console

<4> Storage - Simple Storage Service[S3] - provides secure, durable, highly-scalable object storage:

o. Object based storage only, such video, flat files, pdf, word, image.

o. The data is spread across multiple devices and facilities, and cheap.

o. Files can be from 0 bytes to 5 TB.

o. There is unlimited storage - AWS monitor the use across regions globally.

o. Files are stored in Buckets. Bucket is just a folder with unique name. [Name should be all in lower case]

o. S3 is a universal namespace, that is, names must be unique globally.

o. When you upload a file to S3, you will receive a HTTP 200 code if the upload was successful.

o. Data Consistency Model for S3 [Critical]

=> Read after write consistency for PUTS of new objects.

=> Eventual consistency for overwrite PUTS and DELETES (can take some time to propagate).

=> There is no fee for copying data from EC2 to S3, if they are in the same region.

=> Basically, when you upload a object such as a PDF file, you should be able to read immeidately.

However, when you updating the file, you can not have that much fast speed. It may take some time [million-second] for propagate.

Because, it might be stored across multiple devices like hard disk. Meanwhile a file is being updated, it gets read.

The data either be new version or old version of data. You will not read partial of the data, or corrupted data, like messive meaningless code.

o. S3 is high availability and fault tolerance. No need to worry if the AZ or Region down.

o. URL of S3 bucket .................................. https://s3-eu-west-1.amazonaws.com/emeralit

o. URL of S3 bucket holding statistic website ........ https://S3_Bucket_Name.s3-website-ap-us-east-1.amazonaws.com

o. Using Route53 to host a web site hosted in S3, the bucket name must be same as domain name.

o. The minium file size that you can upload to S3 is 0 bytes.

o. Versioing of S3 must be enabled for cross region replication.

o. key - the name of the object

o. value - the data is made of a sequence of bytes.

o. version id - important for versioning

o. metadata - data about the data you are storing, such last uploaded/modified time

o. versioning

o. metadata

o. subresources - access control list(object permission), torrent

o. Built for 99.99% availability for the S3 platform

o. Amazon guarantee 99.99% availability

o. Amazon ensures 99.99999999999% durabilit for S3

o. Tired storage avaiable

o. Lifecycle management - deletion retention

o. versioning

o. encrytion

o. Secure your data using access control lists and bucket policy

o. Storage Ties/Classes

o. S3 99.99% availability, 99.99999999999% durability, stored redundantly across multiple devices/facilities and is designed to sustain the loss of 2 facilities concurrently.

o. S3 - IA(Infrequently accessed) for data that is accessed less frequently, but requires rapid access when needed. Lower fee than S3, but you are charged a retrieval fee.

o. Reduced redundancy storage - design to provide 99.99% durability and availability of objects over a given year.

o. Glacier - very cheap, but used for archival only. It takes 3-5 hours to restore from Glacier.

o. Glacier is an extremely low-cost storage services for data archival. It stores data for as little as $0.01 per GB per month, and is optimized for data

that is infrequently accessed and for which retrival times of 3 to 5 hours are suitable.

o. S3 Charges - by storage/request time/Storage Management Pricing/Data transfer pricing(replication from one region to region)/Transfer acceleration/

o. Amazon S3 transfer acceleration enables fast, easy, and secure transfers of files over long distances between your end users and an S3 bucket.

Transfer acceleration takes advantage of Amazon CloudFront's globally distributed edge location [LG is a data zone very close to end user].

As the data arrives at an edge location, data is routed to Amazon S3 over an optimized network path.

o. Create S3 bucket: Go to --> Properties --> Storage Management --> Switch between "New Console" and "Old Console".

o. properties:

|

|__ Versioning: can avoid user deleting object files accidently.

|

|__ Logging [Auditing user accessing for bucket]: Cloudtrail will audit all services access which is thought as overwhelm.

|

|__ Statistic wesite hosting [HTML only, Not for PHP]

| |__ o. Scalable

| |__ o. Cheap

|

|__ Tag

|

|__ Cross region replication

|

|__ Events: Exa. notifying when phote uploaded by user

|

|__ Lifecycle: S3/S3-IA/S3 Reduce Reduntency

|

|__ Permission: Every S3 bucket gets created, it will be priviate by default.

|

|__ Management: Metric with numbers of request, storage usage, report of inventory.

o.Versioning of S3 bucket:

==> Versioning could only be disabled, not deleted.

==> Delete one version of object, you can not restore them via new concole.

==> When click on the link, it will point to the latest version --> restore from old concole via "Deleted Marker".

==> When deleted one object, it will be marked with "deleted", but still be able to restore via old concole.

Sort of like removing a file to recycle bin first before deleting permently.

o. Stores all versions of all object, including all writes and even if you deleted the object.

o. Used for backup purpose as well.

o. Once enabled versioning feature, it can not be disabled. Only suspended.

o. Integrates with lifescyel rules. Such as move to Glacier after retention policy, or delete them.

o. Versioing MFA deletion capability. If you want to delete one file, it will required multifactor authentication to improve security.

o. S3 - Cross Region Replication:

==> Versioning must be enabled on both the source and destination buckets.

==> Regions must be unique. Can not replicate between buckets who are in the same region.

==> Files in an existing bucket are not replicated automatically. All subsequent updated files will be replicated automatically.

==> You cannot replicate to multiple buckets or use daisy chaining(at this time)

==> Deleting markers are replicated.

==> Deleting individual versions or delete markers will not be replicated.

==> Understand what cross region replication is at a high level would be fine.

==> Prefix is just the folder under your bucket.

o. S3/Glacier - Life cycle management:

==> Can be used in conjunction with versioning.

==> Can be applied to current versions and previous versions.

==> Following actions can now be done:

1) Transition to the Standard - Infrequent Access storage class (objects of at least 128 KB, 30 days after the creation date).

2) Archive to the Glacier storage class (30 days after IA, if relevant)

3) Permanently delete.

==> Glacier archives: max size is 40 TB; unlimited number of archives; uploading is synchronous; supports Upload/Download/Delete;

Once an archive is uploaded, it is immutable [unchangeable].

Glacier and AWS Storage Gateway encrypt data natively.
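
The three lifecycle actions can be expressed as one rule. The sketch below only builds the rule as a Python dict in the shape accepted by boto3's `put_bucket_lifecycle_configuration`; nothing is sent to AWS. The rule ID, the `logs/` prefix and the 365 day expiry are assumptions for illustration.

```python
def build_lifecycle_configuration(prefix: str = "") -> dict:
    """Build a lifecycle configuration: Standard -> IA -> Glacier -> delete."""
    return {
        "Rules": [
            {
                "ID": "archive-then-expire",        # hypothetical rule name
                "Filter": {"Prefix": prefix},        # "" applies to all objects
                "Status": "Enabled",
                "Transitions": [
                    {"Days": 30, "StorageClass": "STANDARD_IA"},
                    {"Days": 60, "StorageClass": "GLACIER"},
                ],
                "Expiration": {"Days": 365},         # assumed retention period
            }
        ]
    }


config = build_lifecycle_configuration("logs/")
transition_days = [t["Days"] for t in config["Rules"][0]["Transitions"]]
```

The 60 day Glacier transition reflects the note above: 30 days after the move to IA, which itself happens 30 days after creation.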

o. Securing your buckets:

==> By default, all newly created buckets are PRIVATE.

==> You can setup access control to your bucket using:

<1> Bucket policies.

<2> Access Control Lists - individual permissions.

==> S3 buckets can be configured to create access logs, which log all requests made to the S3 bucket. The logs can be delivered to another bucket.

==> Encryption:

<1> In Transit - SSL/TLS [HTTPS]

<2> At rest:

>> Server side encryption:

1) S3 managed keys - SSE-S3.

2) AWS Key Management Service managed keys - SSE-KMS.

3) Server side encryption with customer provided keys - SSE-C.

>> Client side encryption: You encrypt the data yourself and then upload it to S3.
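
On the wire, the three server side options are selected with request headers on the upload. A minimal sketch of that mapping: the function name `sse_headers` is an assumption, while the header names and values are the ones S3 uses.

```python
def sse_headers(mode: str, kms_key_id: str = "") -> dict:
    """Return the request headers that select a server side encryption mode."""
    if mode == "SSE-S3":
        return {"x-amz-server-side-encryption": "AES256"}
    if mode == "SSE-KMS":
        headers = {"x-amz-server-side-encryption": "aws:kms"}
        if kms_key_id:
            # Optional: name a specific KMS key instead of the default one.
            headers["x-amz-server-side-encryption-aws-kms-key-id"] = kms_key_id
        return headers
    if mode == "SSE-C":
        # The key itself and its MD5 travel in two further headers, omitted here.
        return {"x-amz-server-side-encryption-customer-algorithm": "AES256"}
    raise ValueError(f"unknown encryption mode: {mode}")
```

Client side encryption needs no such header, because S3 only ever sees the already encrypted bytes.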

<5> Storage - CloudFront - A content delivery network(CDN) is a system of distributed servers(network) that deliver webpages and other web content to a user based on the geographic

locations of the user, the origin of the webpage and a content delivery server.

o. Key Terminology: Edge Location - This is the location where content will be cached.

o. The S3 Transfer Acceleration URL format, which uses the CloudFront edge network, is https://bucket_name.s3-accelerate.amazonaws.com

o. An Edge Location is separate from an AWS Region/AZ.

o. Origin - This is the origin of all the files that the CDN will distribute. This can be an S3 bucket, an EC2 instance, an Elastic Load Balancer or Route53.

o. Distribution - This is the name given to the CDN, which consists of a collection of Edge Locations.

o. CloudFront can be used to deliver your entire website, including dynamic, static, streaming, and interactive content using a global network of edge locations.

Requests for your content are automatically routed to the nearest edge location, so content is delivered with the best possible performance.

o. Cloudfront is optimized to work with other services, such as S3, EC2, ELB, and Route 53.

Also works seamlessly with any non-AWS origin server, which stores the original, definitive versions of your files.

o. Web distribution - Typically used for websites.

o. RTMP - Used for media streaming - Adobe Flash

o. Web distribution/RTMP distribution

o. Edge Locations are not just READ only, you can write to them too.

o. Objects are cached for the life of the TTL [Time to Live - how long an object stays cached].

o. You can clear cached objects, but you will be charged. [Default TTL is 24 hours. If you clear objects before it expires, for example to publish a new version of a video, you will be charged.]

o. CloudFront is not only for downloads, it is also available for uploads.
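
TTL-based caching at an edge location can be illustrated with a toy cache. This is a sketch under assumptions: `EdgeCache` is an invented class, time is passed in as plain seconds, and the TTL is set to 24 hours.

```python
from typing import Callable, Dict, Tuple


class EdgeCache:
    """Toy model of one edge location caching objects for a fixed TTL."""

    def __init__(self, fetch_from_origin: Callable[[str], str], ttl_seconds: int):
        self.fetch_from_origin = fetch_from_origin
        self.ttl_seconds = ttl_seconds
        self.store: Dict[str, Tuple[str, int]] = {}  # path -> (body, expiry time)
        self.origin_hits = 0

    def get(self, path: str, now: int) -> str:
        cached = self.store.get(path)
        if cached is not None and now < cached[1]:
            return cached[0]                      # still fresh: serve from edge
        body = self.fetch_from_origin(path)       # miss or expired: go to origin
        self.origin_hits += 1
        self.store[path] = (body, now + self.ttl_seconds)
        return body

    def invalidate(self, path: str) -> None:
        # Clearing an object before its TTL expires (the billable operation).
        self.store.pop(path, None)


edge = EdgeCache(lambda path: f"content of {path}", ttl_seconds=24 * 3600)
edge.get("/video.mp4", now=0)          # miss, fetched from origin
edge.get("/video.mp4", now=3600)       # hit, served from cache
edge.get("/video.mp4", now=25 * 3600)  # TTL expired, fetched again
```

Calling `edge.invalidate("/video.mp4")` forces the next request back to the origin even though the TTL has not run out.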

<6> Storage - Storage Gateway - a service that connects an on-premises software appliance with cloud-based storage to provide seamless and secure integration

between an organization's on-premises IT environment and AWS's storage infrastructure.

The service enables you to securely store data to the AWS cloud for scalable and cost-effective storage.

o. AWS storage gateway's software appliance is available for download as a virtual machine image that you install on a host in your data center.

SG supports either VMware ESXi or Microsoft Hyper-V. Once you have installed your gateway and associated it with your AWS account through the activation process,

you can use the AWS management console to create the storage gateway option that is right for you.

o. Four types of Storage Gateway:

>> File Gateway (NFS) - Flat files - .pdf/video/.word ===> S3 - brand new.

Most recently used data is cached on the gateway for low-latency access, and data transfer between your data center and AWS is fully managed and optimized by the gateway.

>> Volume Gateway (iSCSI) - Block based storage, OS/Virtual hard disk/SQL Server, not flat files

a) Stored mode: primary data is stored locally and your entire dataset is available for low-latency access while asynchronously backed up to AWS. [Google Drive]

b) Cached mode: primary data is written to S3, while retaining your frequently accessed data locally in a cache for low-latency access.

>> Tape Gateway (VTL)

o. File Gateway:

>> Files are stored as objects in your S3 buckets, accessed through a Network File System (NFS) mount point.

>> Ownership, permissions, and timestamps are durably stored in S3 in the user metadata of the object associated with the file.

>> Once objects are transferred to S3, they can be managed as native S3 objects, and the bucket policies such as versioning, lifecycle management,

and cross-region replication apply directly to objects stored in your bucket.

o. Volume Gateway [Virtual hard disk]:

>> The volume interface presents your applications with disk volumes using the iSCSI block protocol.

>> Data written to these volumes can be asynchronously backed up as point-in-time snapshots of your volumes, and stored in the cloud as Amazon EBS snapshots.

>> Snapshots are incremental backups that capture only changed blocks. All snapshot storage is also compressed to minimize your storage charges.

o. Tape Gateway:

>> It offers a durable, cost-effective solution to archive your data in the AWS cloud.

>> The VTL interface it provides lets you leverage your existing tape-based backup application infrastructure to store data on virtual tape cartridges

that you create on your tape gateway.

>> Each tape gateway is pre-configured with a media changer and tape drives, which are available to your existing client backup applications as iSCSI devices.

>> You add tape cartridges as you need to archive your data.

>> Supported by NetBackup, Backup Exec, Veeam etc.

o. Exam Tips:

>> File Gateway - For flat files, stored directly on S3.

>> Volume Gateway:

a) Stored volumes - entire dataset is stored on site and is asynchronously backed up to S3.

b) Cached volumes - Entire dataset is stored on S3 and the most frequently accessed data is cached on site.

>> Gateway Virtual Tape Library (VTL) - Used for backup and uses popular backup applications like NetBackup, Backup Exec, Veeam etc.

<7> Storage - Snowball - Previously, AWS Import/Export Disk accelerated moving large amounts of data into and out of the AWS cloud using portable storage devices for transport.

AWS Import/Export Disk transferred your data directly onto and off of storage devices using Amazon's high-speed internal network, bypassing the internet.

The problem was that every client sent different kinds of devices, which were hard to manage. That is why Snowball was introduced.

Snowball is a physical hardware device produced by AWS, about the size of a suitcase.

o. Snowball type:

a) Standard Snowball.

b) Snowball Edge.

c) Snowmobile.

o. Exam Tips - Snowball:

>> Understand what snowball is

>> Understand what import export is

>> Snowball Can

>> Import to S3

>> Export from S3

<8> EC2:

o. Amazon Elastic Compute Cloud (Amazon EC2) is a web service that provides resizable compute capacity in the cloud.

Amazon EC2 reduces the time required to obtain and boot new server instances to minutes, allowing you to quickly scale capacity both up and down,

as your computing requirements change.

o. Amazon EC2 changes the economics of computing by allowing you to pay only for capacity that you actually use.

Amazon EC2 provides developers the tools to build failure resilient applications and isolate themselves from common failure scenarios.

o. EC2 Type by price:

>> On Demand ... Allows you to pay a fixed rate by the hour with no commitment. Suits startup companies.

>> Reserved .... Provides you with a capacity reservation, and offers a significant discount on the hourly charge for an instance. 1 Year or 3 Year Terms.

>> Spot ........ Enables you to bid whatever price you want for instance capacity, providing for even greater savings if your applications have flexible start and end times.

>> Dedicated ... Physical EC2 server dedicated for your use. Dedicated Hosts can help you reduce costs by allowing you to use your existing server-bound software licenses.

o. EC2 - On demand:

>> Users that want the low cost and flexibility of Amazon EC2 without any up-front payment or long-term commitment.

>> Applications with short term, spiky, or unpredictable workloads that cannot be interrupted.

>> Applications being developed or tested on Amazon EC2 for the first time, such as at a startup company.

o. EC2 - Reserved:

>> For example, reserving 2 servers for the annual Black Friday.

>> Applications with steady state or predictable usage.

>> Applications that require reserved capacity.

>> Users able to make upfront payments to reduce their total computing costs even further.

>> A Reserved Instance can be migrated across availability zones, is specific to one instance type, and lowers the Total Cost of Ownership of a system.

>> Reserved Instances are for long-term workloads with predictable CPU/memory/IO performance.

o. EC2 - Spot:

>> Sudden requirement for large, urgent data computing.

>> The Spot bid is the price you are willing to pay, and AWS publishes a fluctuating Spot price.

If your bid meets or exceeds the current Spot price, your EC2 instance is launched; once the Spot price rises above your bid, the instance is terminated.

>> Use Case:

a) Applications that have flexible start and end times.

b) Applications that are only feasible at very low compute prices.

c) Users with urgent computing needs for large amounts of additional capacity.

>> If the Spot instance is terminated by Amazon EC2, you will not be charged for that partial hour of usage.

However, if you terminate the instance yourself, you will be charged for any hour in which the instance ran.
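
The partial-hour rule is easy to get backwards, so here it is as arithmetic. A minimal sketch assuming the per-hour billing described in these notes; `billed_spot_hours` is an illustrative helper, not an AWS API.

```python
import math


def billed_spot_hours(runtime_minutes: int, terminated_by_aws: bool) -> int:
    """Hours charged for a Spot instance under the hourly rule described above."""
    if runtime_minutes <= 0:
        return 0
    if terminated_by_aws:
        # The interrupted partial hour is free: only full hours count.
        return runtime_minutes // 60
    # User-initiated termination: any started hour is charged in full.
    return math.ceil(runtime_minutes / 60)
```

For an instance that ran 150 minutes, the function charges 2 hours if AWS terminated it and 3 hours if you terminated it yourself.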

o. EC2 - Dedicated:

>> For example, government purposes.

o. EC2 Type - DR MC GIFT PX:

>> D - Density

>> R - RAM

>> M - main choice for general purpose apps

>> C - Compute

>> G - Graphics

>> I - IOPS

>> F - FPGA

>> T - cheap general purpose (think T2 Micro)

>> P - Graphics (think Pics)

>> X - Extreme Memory

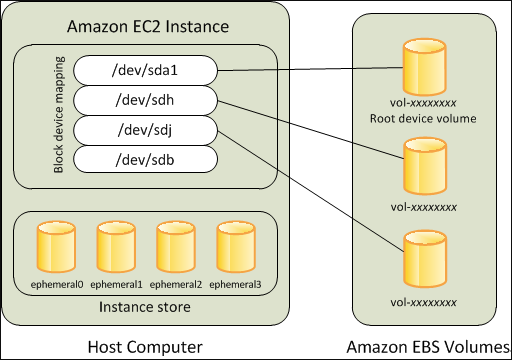

<9> EBS Volume - block based storage:

o. Amazon EBS allows you to create storage volumes and attach them to Amazon EC2 instances.

Once attached, you can create a file system on top of these volumes, run a database, or use them in any other way you would use a block device.

Amazon EBS volumes are placed in a specific Availability Zone, where they are automatically replicated to protect you from the failure of a single component.

All EBS volumes are replicated across multiple facilities, but only within a single AZ. If the AZ fails, the volume is not fault tolerant.

o. EBS Type:

>> General Purpose SSD (GP2) - General purpose, balances both price and performance:

a) Ratio of 3 IOPS per GB with up to 10,000 IOPS and the ability to burst up to 3,000 IOPS for extended periods of time for volumes under 1 TiB.

>> Provisioned IOPS SSD (IO1) - Designed for I/O intensive applications such as large relational or NoSQL databases:

a) Use if you need more than 10,000 IOPS.

b) Can provision up to 20,000 IOPS per volume.

c) 16 TiB as maximum size. Caution: it is TiB [tebibyte, base-2, 2^40 bytes], not TB [terabyte, base-10, 10^12 bytes].

>> Throughput Optimized HDD (ST1):

a) Big data.

b) Data warehouse.

c) Log processing - sequential read/write.

d) Can NOT be a boot volume.

>> Cold HDD (SC1):

a) Lowest Cost Storage for infrequently accessed workloads.

b) File Server

c) Can NOT be a boot volume

>> Magnetic (Standard):

a) Lowest cost per gigabyte of all EBS volume types that is bootable.

b) Magnetic volumes are ideal for workloads where data is accessed infrequently, and applications where the lowest storage cost is important.
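
The GP2 ratio and the TiB versus TB caution both come down to simple arithmetic. A rough sketch using the limits quoted in these notes: the function names are made up, and the real service also applies a minimum baseline that is not modelled here.

```python
def gp2_baseline_iops(size_gb: int) -> int:
    """Baseline IOPS for a GP2 volume: 3 IOPS per GB, capped at 10,000."""
    return min(3 * size_gb, 10_000)


def needs_provisioned_iops(required_iops: int) -> bool:
    """True when the requirement exceeds what GP2 can deliver."""
    return required_iops > 10_000


TIB_IN_BYTES = 2 ** 40   # tebibyte, base-2
TB_IN_BYTES = 10 ** 12   # terabyte, base-10
max_io1_bytes = 16 * TIB_IN_BYTES
max_io1_in_tb = max_io1_bytes / TB_IN_BYTES   # about 17.59 TB
```

So a 100 GB GP2 volume has a 300 IOPS baseline, and the 16 TiB maximum is roughly 10% larger than 16 TB.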

o. EC2/EBS Operation:

a) Launch an EC2 Instance.

b) Security Group Basics.

c) Volumes and Snapshots.

d) Create an AMI.

e) Load Balancers & Health Checks.

f) Cloud Watch.

g) AWS Command Line

h) IAM Roles with EC2

i) Bootstrap Scripts

j) Launch Configuration Groups

k) Autoscaling 101

l) EFS

m) Lambda

n) HPC (High Performance Compute) & Placement Groups

o. EC2 Exam Tips:

>> Know the difference between:

a) On Demand

b) Spot

c) Reserved

d) Dedicated

>> Remember with spot instance:

a) If you terminate the instance, you pay for the hour.

b) If AWS terminates the spot instance, you get the hour it was terminated in for free.

o. EBS Exam Tips:

>> EBS Volume Types:

a) SSD, General Purpose - GP2 - (Up to 10,000 IOPS)

b) SSD, Provisioned IOPS - IO1 - (More than 10,000 IOPS)

c) HDD, Throughput Optimized - ST1 - frequently accessed workloads

d) HDD, Cold - SC1 - less frequently accessed data.

e) HDD, Magnetic - Standard - cheap, infrequently accessed storage

>> You cannot mount 1 EBS volume to multiple EC2 instances; use EFS instead [that file system can be shared].

>> Encryption is supported on all types of EBS volumes.

>> Snapshots of encrypted EBS volumes are automatically encrypted.

>> Encryption is not supported on all instance types.

>> An existing EBS volume cannot be encrypted in place.

>> A shared EBS volume cannot be encrypted.

>> Root EBS volumes cannot be encrypted, except with a third party tool.

>> The EBS volume size, type, and IOPS can be changed while it is attached to an EC2 instance.

o. EC2 Family:

>> DR MC GIFT PX might be missing in some of the regions. Not all regions have all types of EC2 instance.

o. When setting up an EC2 instance, note that one subnet cannot span multiple availability zones.

o. When setting up an EC2 instance, adding tags to the instance helps you trace which server a charge on the bill comes from.

o. When setting up an EC2 instance, in the security group you specify the network protocol and the associated port allowed to reach the server.

"My IP" restricts the source to your own current IP address, so only you can log in. The default value is "Anywhere".

o. T2/C4 can only be backed by EBS. C3/M3 are available with SSD-backed instance storage.

o. $ yum install httpd -y [install the Apache web server]

$ yum update -y

$ service httpd start

/var/www/html is the default document root folder, like /home/amos/public_html

$ ssh ec2-user@192.168.52.91 -i /home/amos/ssh_key_pair.pem

o. EC2 instance monitoring at 5 minute intervals is free; switching to 1 minute intervals costs a bit more.

o. Exam Tips:

>> Termination Protection is turned off by default; you have to turn it on yourself.

>> On an EBS-backed instance, the default action for the root EBS volume is to be deleted when the instance is terminated.

>> EBS root volumes of your DEFAULT AMIs cannot be encrypted. However, you can use a third party tool (such as BitLocker etc.) to encrypt the root volume,

or encryption can be applied when creating AMIs in the AWS console or via the API.

>> Snapshots of data and root volumes can be encrypted and attached to an AMI.

>> EBS encryption supports boot volumes: the boot volume data is copied to an EBS snapshot with the encryption option enabled.

>> Additional volumes can be encrypted. [The volumes that you create can be encrypted]

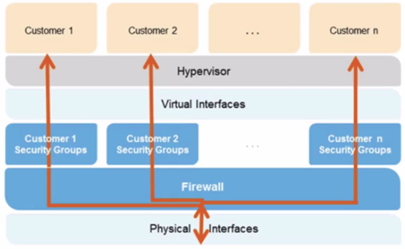

o. Security Group - server virtual firewall

>> When creating EC2 instance, in security group tab, you can choose:

a) SSH, Port 22, Source: 0.0.0.0/0 ==> This IP/mask means that any IP address can access this server.

>> After the EC2 instance is up and Apache is installed, create one HTML page. If you delete the HTTP rule from the security group, the webpage becomes inaccessible immediately.

a) Any change that you apply to a security group takes effect immediately.

>> All Inbound Traffic is Blocked By Default.

>> All Outbound Traffic is Allowed.

>> Changes to Security Groups take effect immediately.

>> You can have any number of EC2 instances within a security group.

>> You can have multiple security groups attached to EC2 Instances

>> Security Groups are STATEFUL.

a) If you create an inbound rule allowing traffic in, that traffic is automatically allowed back out again.

>> You cannot block specific IP addresses using Security Groups; use Network Access Control Lists instead.

>> You can specify allow rules, but not deny rules.
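
The allow-only, default-deny behaviour can be modelled in a few lines. This is a sketch of the rule matching only (statefulness is not modelled): `AllowRule` and `inbound_allowed` are illustrative names, and the IP addresses come from documentation ranges.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network
from typing import List


@dataclass(frozen=True)
class AllowRule:
    """One inbound allow rule; security groups have no deny rules."""
    protocol: str
    port: int
    source_cidr: str


def inbound_allowed(rules: List[AllowRule], protocol: str, port: int, source_ip: str) -> bool:
    # Default is deny: traffic passes only if some allow rule matches it.
    return any(
        rule.protocol == protocol
        and rule.port == port
        and ip_address(source_ip) in ip_network(rule.source_cidr)
        for rule in rules
    )


web_sg = [
    AllowRule("tcp", 22, "203.0.113.10/32"),   # SSH from one address ("My IP")
    AllowRule("tcp", 80, "0.0.0.0/0"),         # HTTP from anywhere
]
```

Because there is no way to express "everyone except this address" with allow rules alone, blocking a single IP needs a Network ACL.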

o. Steps to upgrade the volume type:

>> AWS console ==> EC2 ==> Volumes ==> Action "Detach" on the target volume [detaching guarantees your files are consistent, since it waits until disk writes are done]

==> make a snapshot of the volume ==> Restore the snapshot onto a higher-tier storage type, such as a "Provisioned IOPS" disk ==> Attach the volume ==> Mount the file system again.
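
The same console steps can be scripted. The sketch below assumes `ec2` is a boto3 EC2 client (for example `boto3.client("ec2")`) passed in by the caller; the function name, the snapshot description, and the choice of `io1` as the target type are assumptions for illustration.

```python
def upgrade_volume(ec2, volume_id, instance_id, device, availability_zone, iops):
    """Detach a volume, snapshot it, restore as Provisioned IOPS, re-attach.

    `ec2` is expected to behave like boto3.client("ec2"); the file system
    must be unmounted before and mounted again after this function runs.
    """
    ec2.detach_volume(VolumeId=volume_id)
    ec2.get_waiter("volume_available").wait(VolumeIds=[volume_id])

    snapshot = ec2.create_snapshot(VolumeId=volume_id, Description="pre-upgrade")
    snapshot_id = snapshot["SnapshotId"]
    ec2.get_waiter("snapshot_completed").wait(SnapshotIds=[snapshot_id])

    new_volume = ec2.create_volume(
        AvailabilityZone=availability_zone,  # must match the instance's AZ
        SnapshotId=snapshot_id,
        VolumeType="io1",
        Iops=iops,
    )
    new_volume_id = new_volume["VolumeId"]
    ec2.get_waiter("volume_available").wait(VolumeIds=[new_volume_id])

    ec2.attach_volume(VolumeId=new_volume_id, InstanceId=instance_id, Device=device)
    return new_volume_id
```

Passing the client in keeps the function free of credentials and lets it be exercised against a stub before touching a real account.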

o. AWS EBS volumes usually go with RAID 0 or RAID 10; RAID 5 is rarely chosen.

o. To increase disk I/O, you can create a RAID array from multiple individual AWS EBS volumes.

o. Problem - When you take a snapshot, the snapshot excludes data held in the cache by applications and the OS.

This tends not to matter on a single volume; however, with multiple volumes in a RAID array it can be a problem due to interdependencies of the array.

>> So, how to make a snapshot of a disk array:

a) Stop the application from writing to disk.

b) Flush all caches to the disk.

c) How to achieve the above 2 points?

==> Freeze the file system.

==> Unmount the RAID Array.

==> Shut down the associated EC2 instance.

o. To create a snapshot for Amazon EBS volumes that serve as root devices, you should stop the instance before taking the snapshot.

o. Snapshots of encrypted volumes are encrypted automatically. Volumes restored from encrypted snapshots are encrypted automatically.

o. You can share snapshots, but only if they are unencrypted. These snapshots can be shared with other AWS accounts or made public.

o. To launch an EC2 instance from a snapshot of a boot volume, you must first create an AMI from the snapshot.

o. AMI Type [based on root device]:

>> EBS backed volume ...................... It is a product of AWS created from an EBS volume.

>> Instance store backed volume ........... These images could be created by any third party amateur [ AWS console => Launch EC2 => Go to "Community AMI" to choose AMI ]

>> All AMIs are categorized as either backed by Amazon EBS or backed by instance store.

>> For EBS Volumes: The root device for an instance launched from the AMI is an AWS EBS volume created from an Amazon EBS snapshot.

>> For instance store volumes: The root device for an instance launched from the AMI is an instance store volume created from a template stored in Amazon S3.

Because the image has to be fetched from S3, launch is slower than for an EBS backed instance.

>> Sometimes the server needs to be stopped for maintenance. That is why people usually choose EBS backed instances rather than instance store backed ones.

>> If an instance store backed instance is terminated or its underlying host fails, the data is lost; a plain reboot keeps the data.

>> An instance store usually holds temporary data, such as caches or buffers.

Also, considering price and disk I/O, people sometimes choose an instance store AMI.

>> AMIs can be copied across regions for disaster recovery, and can be bought or sold in the marketplace.

o. The main difference between EBS backed and instance store backed instances is:

>> An instance launched from EBS can be stopped.

>> However, an instance launched from "instance store" can only be rebooted or terminated. Once the instance is terminated, all the data is lost.

>> Previously, all images were stored in instance storage instead of on EBS volumes.

o. Exam Tips - EBS vs Instance Store:

>> Instance Store Volumes are sometimes called Ephemeral Storage.

>> Instance store backed instances cannot be stopped. If the underlying host fails, you will lose your data.

>> EBS backed instances can be stopped. You will not lose the data on this instance if it is stopped.

>> EBS volume is only in one AZ, but distributed across multiple facilities.

>> You can reboot both without losing your data.

>> By default, both ROOT volumes will be deleted on termination, however with EBS volumes, you can tell AWS to keep the root device volume.

o. Elastic Load Balancer:

>> Instances monitored by ELB are reported as: InService, or OutOfService.

>> Health Checks check the instance health by talking to it.

>> Have their own DNS name. You are never given an IP address.

>> An AWS load balancer is only resolved by its DNS (alias) name, not by IP.

>> ELB uses the access logs feature to capture all client connection details.

>> ELB has a health check feature. If an EC2 instance behind the ELB fails the health check, traffic stops being routed to that instance.

>> ELB can balance across multiple availability zones within one region, but not across regions.
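
How health checks affect routing can be shown with a toy router. A minimal sketch: round-robin is assumed here purely for illustration, and the instance IDs and function names are invented.

```python
from itertools import cycle
from typing import Dict, Iterator, List


def in_service(health: Dict[str, bool]) -> List[str]:
    """Instances that passed their health check (InService)."""
    return [instance for instance, healthy in health.items() if healthy]


def route_requests(health: Dict[str, bool], request_count: int) -> List[str]:
    """Spread requests round-robin over InService instances only."""
    targets = in_service(health)
    if not targets:
        raise RuntimeError("no InService instances behind the load balancer")
    rotation: Iterator[str] = cycle(targets)
    return [next(rotation) for _ in range(request_count)]


health_checks = {"i-aaa": True, "i-bbb": False, "i-ccc": True}
assignments = route_requests(health_checks, 4)
# i-bbb is OutOfService, so it never receives traffic.
```

Once `i-bbb` passes its health check again, flipping its entry to `True` puts it back into the rotation.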

o. CloudWatch:

>> Default monitoring metrics: CPU, Disk, Network, and Status. Not RAM.

>> Difference between CloudWatch and CloudTrail: CloudWatch monitors IT environment resources; CloudTrail audits user actions, such as creating a user.

>> Standard Monitoring = 5 Minutes

>> Detailed Monitoring = 1 Minute

>> Dashboards - Create dashboards to see what is happening in your AWS environment.

>> Alarms - Allows you to set Alarms that notify you when particular thresholds are hit.

>> Events - CloudWatch Events helps you to respond to state changes in your AWS resources.

>> Logs - CloudWatch Logs helps you to aggregate, monitor, and store logs. The log agent is a tool installed on the server that sends log data to CloudWatch.
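
The idea behind an alarm threshold can be sketched as a pure function. This is a simplification for intuition, not CloudWatch's actual evaluation logic: `alarm_state` is an invented helper and the CPU figures are sample data.

```python
from typing import List


def alarm_state(datapoints: List[float], threshold: float, evaluation_periods: int) -> str:
    """Return "ALARM" when the last N datapoints all exceed the threshold."""
    if len(datapoints) < evaluation_periods:
        return "INSUFFICIENT_DATA"
    recent = datapoints[-evaluation_periods:]
    return "ALARM" if all(value > threshold for value in recent) else "OK"


# CPU utilisation (%) sampled once per period (5 min standard, 1 min detailed).
cpu = [35.0, 62.0, 91.0, 95.0]
```

With an 80% threshold, the sample data alarms over 2 periods but not over 3, since the third-to-last datapoint is below the threshold.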

o. IAM with EC2:

>> Storing individual secret access keys on an instance is insecure. Instead, you can create an IAM role, which is global with no region limitation.

>> Use case: you build an application that users can log in to via Facebook; you can grant an IAM role to the EC2 instance without physically storing credentials on it.

>> After creating the IAM role, you attach it to the EC2 instance. Once the EC2 instance is granted the role, it can access S3 without any stored credentials.
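
A role for EC2 consists of two JSON documents: a trust policy saying who may assume the role, and a permissions policy saying what the role may do. A minimal sketch of both as Python dicts: the function names and the bucket name are hypothetical, and the read-only S3 permissions are just one possible choice.

```python
import json


def ec2_trust_policy() -> dict:
    """Trust policy that lets the EC2 service assume the role."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Service": "ec2.amazonaws.com"},
                "Action": "sts:AssumeRole",
            }
        ],
    }


def s3_read_policy(bucket_name: str) -> dict:
    """Permissions policy granting read access to one bucket (name is hypothetical)."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["s3:GetObject", "s3:ListBucket"],
                "Resource": [
                    f"arn:aws:s3:::{bucket_name}",
                    f"arn:aws:s3:::{bucket_name}/*",
                ],
            }
        ],
    }


trust_json = json.dumps(ec2_trust_policy(), indent=2)
```

The instance then receives temporary credentials for the role automatically, so no access keys need to be written to disk.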

o. Querying EC2 instance Metadata via HTTP API request:

>> Log in to the EC2 instance.

>> curl http://169.254.169.254/latest/meta-data

>> curl http://169.254.169.254/latest/meta-data/public-ipv4
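
The same metadata queries can be made from application code. A minimal sketch using only the standard library: `metadata_url` and `fetch_metadata` are illustrative names, and the fetch only succeeds when run on an EC2 instance that answers plain requests like the curl calls above.

```python
from urllib.request import urlopen

METADATA_BASE = "http://169.254.169.254/latest/meta-data"


def metadata_url(path: str = "") -> str:
    """Build the metadata URL for a category such as "public-ipv4"."""
    return f"{METADATA_BASE}/{path.lstrip('/')}" if path else METADATA_BASE


def fetch_metadata(path: str = "", timeout: float = 2.0) -> str:
    """Fetch one metadata value; only reachable from inside an EC2 instance."""
    with urlopen(metadata_url(path), timeout=timeout) as response:
        return response.read().decode("utf-8")
```

On an instance, `fetch_metadata("public-ipv4")` corresponds to the second curl command above.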

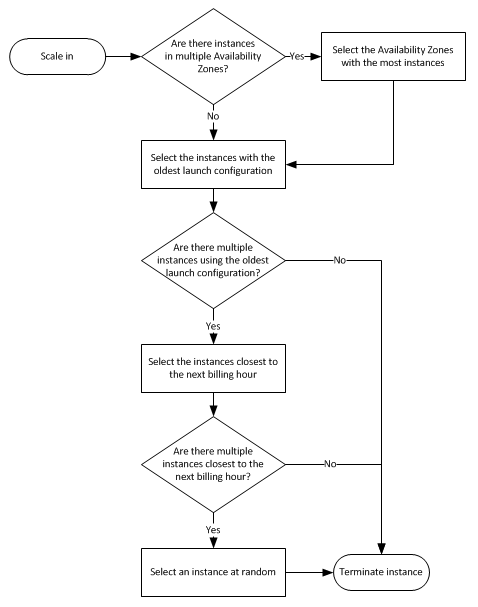

o. EC2 instance auto scaling:

>> Go to AWS console ==> EC2 ==> Auto Scaling Groups ==> first create a "Launch Configuration"

==> Then create the "Auto Scaling Group" by indicating the EC2 instance type.

>> An Auto Scaling group can contain EC2 instances across multiple AZs, but only within one region.

>> Auto scaling termination policy:

>> All AMIs are categorized as either backed by Amazon EBS or backed by instance store.

>> For EBS Volumes: The root device for an instance launched from the AMI is an AWS EBS volume created from an Amazon EBS snapsot.

>> For instance store volumes: The root device for an instance launched from the AMI ss an instance store volume created from a template stored in Amazon S3.

Since needing to fetching image from S3, the launch speed will be slower than EBS backed instance.

>> Sometime, the server needs to be reboot for maintenance. That is the reason why usually people choose EBS backed instances, not instance store backed.

>> Any instance store volume get reboot, the data will be lost.

>> The instance store instance usually stores temporary data, such as cache or buffer.

Also, in considering of price and disk I/O, people sometime choose instance store AMI.

>> AMI needs to be copied across regions for disaster recovery, and be allowed to purchase or sell in the marketplace.

o. Main difference between EBS backed and Instance Store volume is:

>> The instance launched from EBS can be stopped.

>> However, the instance launched from "instance store" can only be reboot or terminated. As long as the instance stop, all the data will be lost.

>> Previously, all the image are stored in instance storage instead of EBS volume.

o. Exam Tips - EBS vs Instance Store:

>> Instance Store Volumes are sometimes called Ephemeral Storage.

>> Instance store volumes cannot be stopped. If the underlying host fails, you will lose your data.

>> EBS backed instances can be stopped. You will not lose the data on this instance if it is stopped.

>> EBS volume is only in one AZ, but distributed across multiple facilities.

>> You can reboot both, you will not lose your data.

>> By default, both ROOT volumes will be deleted on termination, however with EBS volumes, you can tell AWS to keep the root device volume.

o. Elastic Load Balancer:

>> Instances monitored by ELB are reported as: InService, or OutOfService.

>> Health Checks check the instance health by talking to it.

>> Have their own DNS name. You are never given an IP address.

>> An AWS load balancer can only be reached through its DNS name (or an alias to it), not through an IP address.

>> ELB can use its access logs feature to capture all the client connection details.

>> ELB has a health check feature. If an EC2 instance behind the ELB fails the health check, traffic stops being routed to that instance.

>> An ELB can distribute traffic across multiple Availability Zones within one region, but not across regions.
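To make the health check behaviour concrete, here is a toy model, not the real ELB implementation. The instance IDs are invented, and round-robin is just an assumed distribution strategy for the sketch.

```python
from itertools import cycle


def in_service(instances):
    """Keep only the instance IDs whose health state is InService."""
    return [iid for iid, state in instances.items() if state == "InService"]


def route_requests(instances, request_count):
    """Spread requests round-robin over the InService instances only."""
    healthy = in_service(instances)
    if not healthy:
        raise RuntimeError("no InService instances behind the load balancer")
    targets = cycle(healthy)
    return [next(targets) for _ in range(request_count)]


# Hypothetical fleet: i-bbb failed its health check, so it gets no traffic.
fleet = {"i-aaa": "InService", "i-bbb": "OutOfService", "i-ccc": "InService"}
print(route_requests(fleet, 4))
```

The instance marked OutOfService never appears in the result; traffic goes only to the other two.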

o. CloudWatch:

>> What are the default monitoring metrics: CPU, Disk, Network, and Status. Not RAM.

>> What is the difference between CloudWatch and CloudTrail: CloudWatch is for monitoring the resources in your IT environment; CloudTrail is for auditing user actions, such as creating a user.

>> Standard Monitoring = 5 Minutes

>> Detailed Monitoring = 1 Minute

>> Dashboards - Creates awesome dashboards to see what is happening with your AWS environment.

>> Alarms - Allows you to set Alarms that notify you when particular thresholds are hit.

>> Events - CloudWatch Events helps you to respond to state changes in your AWS resources.

>> Logs - CloudWatch Logs helps you to aggregate, monitor, and store logs. The log agent is a tool installed on the server that sends log data to CloudWatch.

o. IAM with EC2:

>> Storing an individual secret access key on an instance is insecure. Instead, you can create an IAM role, which is global and not limited to a region.

>> Use case: you need to build an application that users can log in to via Facebook; you can grant an IAM role to the EC2 instance instead of storing credentials on it.

>> After creating the IAM role, you can attach it to an EC2 instance. As long as the EC2 instance has a role that allows it, it can access S3 without any stored credentials.

o. Querying EC2 instance Metadata via HTTP API request:

>> Log in to the EC2 instance.

>> curl http://169.254.169.254/latest/meta-data

>> curl http://169.254.169.254/latest/meta-data/public-ipv4
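The same lookups can be sketched in Python with only the standard library. The helper names below are made up for illustration. The plain GET mirrors the curl commands above, so it assumes the instance accepts unauthenticated metadata requests; instances set to require session tokens (IMDSv2) need an extra token step that is not shown here.

```python
import urllib.request

METADATA_BASE = "http://169.254.169.254/latest/meta-data"


def metadata_url(path=""):
    """Build the metadata URL for a path such as 'public-ipv4'."""
    return f"{METADATA_BASE}/{path.lstrip('/')}"


def fetch_metadata(path="", timeout=2):
    """GET one metadata value; this only works when run on an EC2 instance."""
    with urllib.request.urlopen(metadata_url(path), timeout=timeout) as resp:
        return resp.read().decode("utf-8")


# On an EC2 instance:
#   print(fetch_metadata())               # list the available keys
#   print(fetch_metadata("public-ipv4"))  # the instance's public IPv4 address
```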

o. EC2 instance auto scaling:

>> Go to AWS console ==> EC2 ==> Auto Scaling Groups ==> first create a "Launch Configuration"

==> Then create the "Auto Scaling Group" from that launch configuration, which specifies the EC2 instance type.

>> An Auto Scaling group can contain EC2 instances across multiple AZs, but only within a single region.

>> Auto Scaling termination policy:

o. EC2 Placement Group:

>> A placement group is a logical grouping of instances within a single AZ. Using placement groups enables applications to participate in a low latency, 10 Gbps network.

Placement groups are recommended for applications that benefit from low network latency, high network throughput, or both.

>> Basically, a placement group is for grid computing or cluster-based IT environments that require a low latency network, such as Oracle RAC.

o. EC2 Placement Group Exam Tips:

>> A placement group can’t span multiple Availability Zones.

>> The name you specify for a placement group must be unique within your AWS account.

>> Only certain types of instances can be launched in a placement group (Compute Optimized, GPU, Memory Optimized, Storage Optimized)

>> AWS recommends homogeneous instances within a placement group, that is, all instances of the same family and type, which helps reduce network latency.

>> You can’t merge placement groups.

>> You can’t move an existing instance into a placement group.

>> You can create an AMI from your existing instance, and then launch a new instance from the AMI into a placement group.

o. EC2 Lambda:

>> An event-driven function. For example, if 5 users each send an HTTP request to API Gateway, 5 Lambda function invocations will be triggered.

>> What is Lambda? The top layer in a stack of abstractions:

==> Data Centres

==> Hardware

==> Assembly Code/Protocols

==> High Level Languages

==> Operating Systems

==> Application Layer/AWS APIs

==> AWS Lambda

>> AWS Lambda is a compute service where you can upload your code and create a Lambda function.

AWS Lambda takes care of provisioning and managing the servers that you use to run the code.

You don’t have to worry about operating systems, patching, scaling, etc.

You can use Lambda in the following ways:

==> As an event-driven compute service where AWS Lambda runs your code in response to events.

These events could be changes to data in an Amazon S3 bucket or an Amazon DynamoDB table.

==> As a compute service to run your code in response to HTTP requests using Amazon API Gateway or API calls made using AWS SDKs.
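As an illustration of the HTTP case, a minimal Python handler might look like the sketch below. It assumes the function sits behind API Gateway with proxy integration, so the query string arrives under `queryStringParameters` and the return value must carry `statusCode` and `body`; the greeting logic itself is invented for the example.

```python
import json


def lambda_handler(event, context):
    """Handle one API Gateway request; each incoming event is one invocation."""
    # queryStringParameters is None when the request has no query string.
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "world")
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }
```

The handler can be exercised locally by calling it with a hand-built event, for example `lambda_handler({"queryStringParameters": {"name": "Ann"}}, None)`.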

>> Programming languages that Lambda supports:

==> Node.js

==> Python

==> Java

==> C#

>> AWS Lambda pricing:

==> Number of requests:

First 1 million requests are free. $0.20 per 1 million requests thereafter.

==> Duration:

Duration is calculated from the time your code begins executing until it returns or otherwise terminates, rounded up to the nearest 100 ms.

The price depends on the amount of memory you allocate to your function. You are charged $0.00001667 for every GB-second used.
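The pricing rules above can be turned into a quick estimate. This is a rough sketch using only the figures quoted in these notes; it bills every request at the same average duration, and it ignores any free allowance on duration, since the notes do not mention one. The function names are made up.

```python
import math

PRICE_PER_MILLION_REQUESTS = 0.20   # USD, after the free requests
FREE_REQUESTS = 1_000_000
PRICE_PER_GB_SECOND = 0.00001667    # USD


def billed_duration_ms(actual_ms):
    """Round a duration up to the nearest 100 ms, as described above."""
    return math.ceil(actual_ms / 100) * 100


def lambda_monthly_cost(requests, avg_duration_ms, memory_mb):
    """Estimate the monthly cost in USD from request count, duration, and memory."""
    billable_requests = max(requests - FREE_REQUESTS, 0)
    request_cost = billable_requests / 1_000_000 * PRICE_PER_MILLION_REQUESTS
    seconds = billed_duration_ms(avg_duration_ms) / 1000
    gb_seconds = requests * seconds * (memory_mb / 1024)
    return request_cost + gb_seconds * PRICE_PER_GB_SECOND
```

Worked example: 3 million requests at 150 ms (billed as 200 ms) with 512 MB gives 2 x $0.20 = $0.40 for requests, plus 300,000 GB-seconds x $0.00001667 = about $5.00 for duration, so roughly $5.40 in total.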

>> Caution: a Lambda function can run for at most 5 minutes.

>> Exam Tips - EC2 Lambda:

==> Lambda scales out (not up) automatically.

==> Lambda functions are independent, 1 event = 1 function.

==> Lambda is serverless.

==> Know what AWS services are serverless: API Gateway, DynamoDB ...

==> Lambda functions can trigger other lambda functions, 1 event can = x functions if functions trigger other functions.

==> Architectures can get extremely complicated, AWS X-ray allows you to debug what is happening.

==> Lambda can do things globally, you can use it to back up S3 buckets to other S3 buckets etc.

==> Know your triggers.

==> Maximum Lambda function execution time is 300 seconds.

o. Elastic File System:

>> It works like a shared drive for a cluster: multiple EC2 instances can mount the same file system.

o. Serverless:

>> Similar to Google platform serverless SMTP monitoring script.

>> General HTTP request router:

==> User browser ==> HTTP request for .html file ==> In the html file, there is a javascript function xhttp(GET, "AWS-API-Gateway-URL")

==> In the AWS console, go to "API-Gateway" to create a trigger for a Python function script

==> When the javascript request arrives at API-Gateway, it triggers the Python script to perform its predefined function.

<10> Route 53:

o. You can use both IPv4 and IPv6 with AWS.

o. An Elastic Load Balancer does not expose a fixed IPv4 or IPv6 address, only a DNS name.

o. A domain name such as emeralit.com is a naked domain: it has no prefix such as www, mail, sale, etc.

o. Top Level Domains: These top level domain names are controlled by the Internet Assigned Numbers Authority (IANA) in a root zone database

which is essentially a database of all available top level domains.

o. Domain Registrars: Because all of the names in a given domain have to be unique, there needs to be a way to organize this all so that domain names aren’t duplicated.

This is where domain registrars come in.

o. A registrar is an authority that can assign domain names directly under one or more top-level domains.

These domains are registered with InterNIC, a service of ICANN, which enforces uniqueness of domain names across the Internet.

Each domain name becomes registered in a central database known as the WHOIS database.

Popular domain registrars include GoDaddy.com, 123-reg.co.uk etc.

o. SOA Records - The SOA record stores information about:

>> The name of the server that supplied the data for the zone.

>> The administrator of the zone.

>> The current version of the data file.

>> The number of seconds a secondary name server should wait before checking for updates.

>> The number of seconds a secondary name server should wait before retrying a failed zone transfer.

>> The maximum number of seconds that a secondary name server can use data before it must either be refreshed or expire.

>> The default number of seconds for the time-to-live field on resource records.
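The SOA fields listed above appear, in the same order, in the record's text form. The sketch below splits such a record into named fields; the class, the field names, and the sample record are made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class SoaRecord:
    primary_ns: str   # server that supplied the data for the zone
    admin: str        # zone administrator (mailbox written with "." instead of "@")
    serial: int       # current version of the data file
    refresh: int      # seconds a secondary waits before checking for updates
    retry: int        # seconds a secondary waits before retrying a failed transfer
    expire: int       # max seconds a secondary may use data without a refresh
    minimum_ttl: int  # default time-to-live in seconds for resource records


def parse_soa(value):
    """Split the seven whitespace-separated SOA fields into a SoaRecord."""
    parts = value.split()
    if len(parts) != 7:
        raise ValueError(f"expected 7 SOA fields, got {len(parts)}")
    primary_ns, admin, *numbers = parts
    return SoaRecord(primary_ns, admin, *(int(n) for n in numbers))


# Hypothetical record for example.com:
soa = parse_soa("ns1.example.com. hostmaster.example.com. 2017090201 7200 900 1209600 86400")
print(soa.refresh, soa.minimum_ttl)
```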

o. NS Records:

NS stands for Name Server. NS records are used by Top Level Domain servers to direct traffic to the content DNS server that contains the authoritative DNS records.

o. A Records:

An “A” record is the fundamental type of DNS record and the “A” in A record stands for “Address”.

The A record is used by a computer to translate the name of the domain to the IP address.

For example http://www.acloud.guru might point to http://123.10.10.80.

o. TTL:

The length of time that a DNS record is cached on either the resolving server or the user's own local PC is equal to the value of the “Time To Live” (TTL) in seconds.

The lower the time to live, the faster changes to DNS records propagate throughout the internet.
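A toy cache shows why a lower TTL means faster propagation: a resolver keeps serving the old answer until the TTL runs out, and only then asks again. This is a simplified sketch, not how any particular resolver is implemented; the clock is passed in as a plain number of seconds to keep the example deterministic.

```python
class TtlCache:
    """Minimal DNS-style cache: an entry is usable until its TTL has elapsed."""

    def __init__(self):
        self._entries = {}  # name -> (value, expires_at)

    def put(self, name, value, ttl, now):
        """Store an answer that stays valid for `ttl` seconds after `now`."""
        self._entries[name] = (value, now + ttl)

    def get(self, name, now):
        """Return the cached value, or None if it is missing or has expired."""
        entry = self._entries.get(name)
        if entry is None or now >= entry[1]:
            self._entries.pop(name, None)
            return None
        return entry[0]


cache = TtlCache()
cache.put("www.example.com", "123.10.10.80", ttl=300, now=0)
print(cache.get("www.example.com", now=299))  # still cached: the old answer
print(cache.get("www.example.com", now=300))  # expired: must be resolved again
```

With a TTL of 300, a changed record can take up to 300 seconds to reach this client; with a TTL of 60, at most 60 seconds.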

o. CNAMES:

A Canonical Name (CName) can be used to resolve one domain name to another.

For example, you may have a mobile website with the domain name http://m.acloud.guru that is used when users browse to your domain name on their mobile devices.

You may also want the name http://mobile.acloud.guru to resolve to this same address.

o. Alias Records:

Alias records are used to map resource record sets in your hosted zone to Elastic Load Balancers, CloudFront distributions, or S3 buckets that are configured as websites.

The term "Alias record" is specific to AWS. An AWS load balancer can only be reached through its domain name, NOT an IP address.

Alias records work like a CNAME record in that you can map one DNS name (www.example.com) to another ‘target’ DNS name (elb1234.elb.amazonaws.com).

Key difference - A CNAME can’t be used for naked domain names (zone apex). You cannot have a CNAME for http://acloud.guru; it must be either an A record or an Alias.
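The zone apex rule can be written as a small check. This is only a study aid with a made-up function name; it covers just the three record kinds discussed above.

```python
def allowed_record_types(record_name, zone_name):
    """Return which of A, Alias, and CNAME may be used for this name."""
    name = record_name.rstrip(".").lower()
    zone = zone_name.rstrip(".").lower()
    if name == zone:
        # Naked domain (zone apex): a CNAME is not allowed here.
        return ["A", "Alias"]
    if name.endswith("." + zone):
        return ["A", "Alias", "CNAME"]
    raise ValueError(f"{record_name} is not inside zone {zone_name}")


print(allowed_record_types("acloud.guru", "acloud.guru"))
print(allowed_record_types("www.acloud.guru", "acloud.guru"))
```

The first call leaves out CNAME because the name is the zone apex; the second includes it because www is a subdomain.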

o. Route 53 - Exam Tips:

>> ELBs do not have pre-defined IPv4 addresses; you resolve to them using a DNS name.

>> Understand the difference between an Alias Record and a CNAME.

>> Given the choice, always choose an Alias Record over a CNAME.

>> Route 53 is a global service; it is not tied to a specific region.

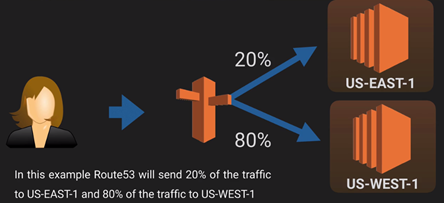

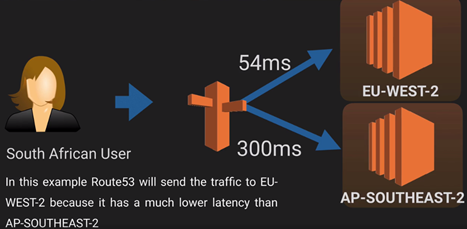

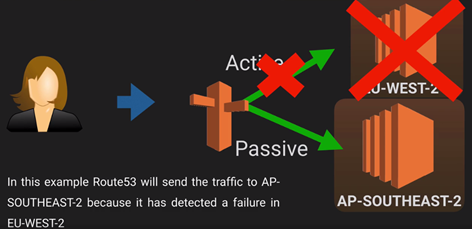

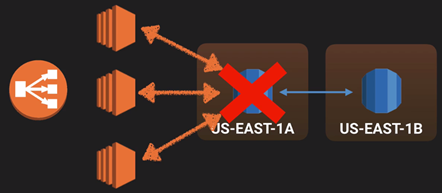

o. Route53 Routing Policies:

>> Simple:
